Day 24: Putting it all together

Part of the Advent of Grok {Shan, Shui}*. See blog post for intro and table of contents.

On day 1, I stated two goals for this advent-of-grokking:

- To develop intuitions on how procedural art generation works, which I knew almost nothing about before the project started

- To test intuitions about my approach to mostly-declarative code via carefully chosen language atoms and chaining of computations

I am reasonably happy on both counts. An approximate understanding of how it is all done did emerge from this project, and that is what the rest of today’s text is dedicated to. As for the second goal, I did test my approaches and found them working, even if not as impressively as I initially hoped. I half-expected to be able to distill every step of the algorithm (planning the landscape→filling the mountain→drawing the tree or house or boat) into exactly one fully declarative statement, but came to understand that this overestimated the potential expressiveness. Still, the approach was battle-tested in an area foreign to me, and I have things to think about.

What follows is my attempt to explain what I understood over the previous 23 days, spending roughly 40 to 90 minutes per day on code reading, rewriting, and explaining in this diary.

How the {Shan, Shui}* works

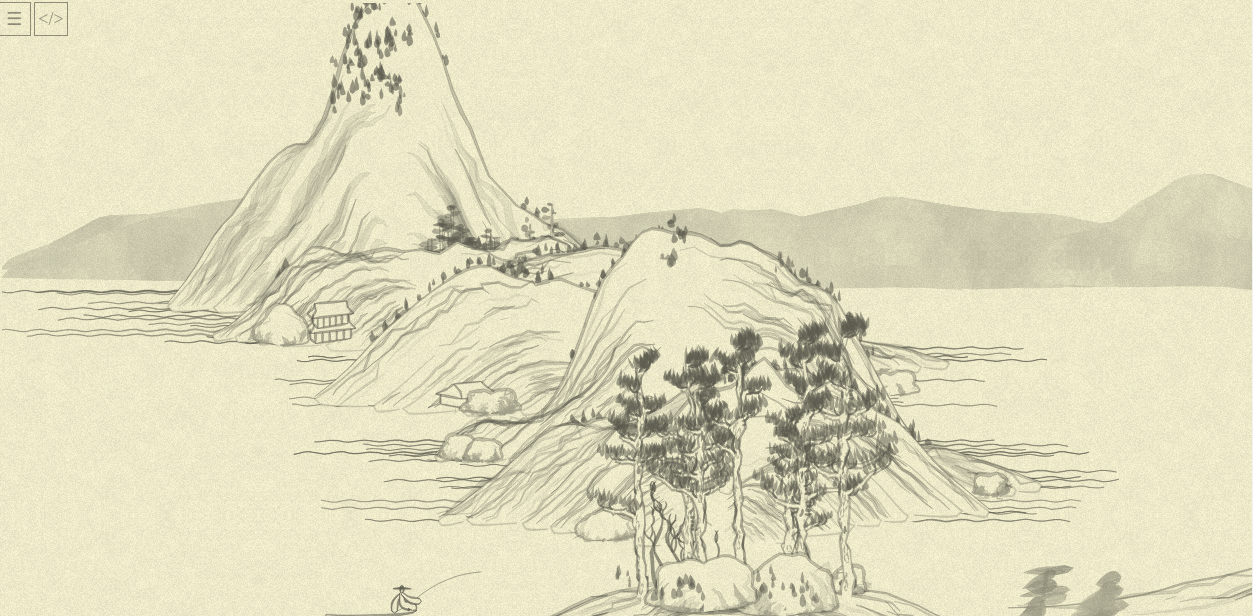

Here is the beginning of the infinite scroll I’ve investigated.

It is rendered by the file grok.html. This file contains my rewrite of some of the original code’s functions; the rewrite is selective, but spans all layers from the entire landscape down to single lines. The picture generated is exactly the same as the one the original code generates, with two differences. First, instead of a random picture every time, I fixed the pseudo-random generator seed to a constant, so the elements of the picture are still guided by random numbers, but it is the same sequence of numbers, and thus the same picture, every time. Second, at the last stage I changed the zoom of the picture a bit, so more of it is visible.
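To illustrate why a fixed seed means a fixed picture, here is a minimal sketch. It uses mulberry32, a small well-known seeded generator, purely as a stand-in: it is not the generator from the original code, and `makeRandom` is a name I made up for this example.

```javascript
// A small seeded pseudo-random generator (mulberry32), used only as a stand-in:
// it is NOT the generator from the original code.
function makeRandom(seed) {
  let state = seed >>> 0;
  return function random() {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // float in [0, 1)
  };
}

// Two generators created with the same seed produce the same sequence...
const first = makeRandom(42);
const second = makeRandom(42);
const seqA = [first(), first(), first()];
const seqB = [second(), second(), second()];
// ...so everything "randomly" derived from them is identical on every run.
```

Any decision in the picture that draws its numbers from such a generator (where to put a mountain, how wide a rock is) repeats exactly as long as the seed and the order of calls stay the same.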

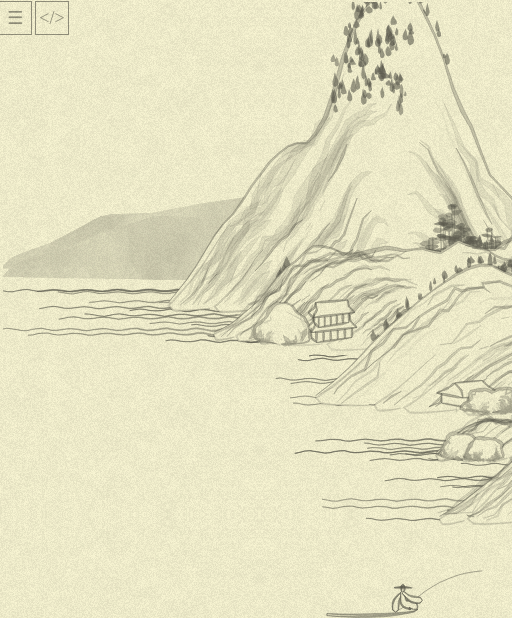

The picture is generated in 512px-wide frames, here is the first one:

The drawing of one frame is performed in the chunkloader function, which adds several “chunks” of the canvas to the global STATE.chunks. Function investigation: day 12, day 21.

Which chunks should be in the frame is decided by the mountplanner function. It returns a list of objects with fields {tag, x, y}, and these objects are then drawn in chunkloader by calling the appropriate function for each tag. Function investigation: day 13, day 14.
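The "plan first, then draw by tag" split can be sketched roughly like this. The `drawers` map and `drawPlan` are hypothetical names, and the drawing functions are stand-ins that return strings instead of real canvas content, so this shows only the shape of the dispatch, not the actual chunkloader.

```javascript
// Hypothetical stand-ins for the real drawing functions; each returns a string
// instead of drawing, so the sketch stays runnable on its own.
const drawers = new Map([
  ['mountain', ({x, y}) => `mountain at ${x},${y}`],
  ['distmount', ({x, y}) => `distmount at ${x},${y}`],
  ['flatmount', ({x, y}) => `flatmount at ${x},${y}`],
  ['boat', ({x, y}) => `boat at ${x},${y}`],
]);

// Turn a plan (a list of {tag, x, y}) into chunks by looking up the drawer for each tag.
function drawPlan(plan) {
  return plan
    .filter(({tag}) => drawers.has(tag)) // unknown tags are skipped
    .map(({tag, x, y}) => ({tag, x, y, canvas: drawers.get(tag)({x, y})}));
}

const chunks = drawPlan([
  {tag: 'mountain', x: 120, y: 300},
  {tag: 'boat', x: 400, y: 390},
]);
```

The useful property is that the planner knows nothing about drawing: it only emits tags and coordinates.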

mountplanner, as well as many other functions down the layers, uses a Perlin noise generator. This is an algorithm that produces “gradient noise”: a sequence of random values in which nearby values change smoothly:

// take a range of values from 1 to 3 with step 0.2, and produce noise for them:

range(1, 3, 0.2).map(x => Noise.noise(x).toFixed(2)).join(', ')

// '0.69, 0.66, 0.59, 0.58, 0.54, 0.43, 0.44, 0.44, 0.42, 0.39'
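For intuition, here is a minimal one-dimensional gradient noise sketch. It is not the project's `Noise.noise` (the names `gradientAt`, `fade` and `noise1d` and the hash constants are my own assumptions), and it produces values centered on 0 rather than the numbers shown above. It only demonstrates the idea: random slopes at integer points, smoothly blended in between.

```javascript
// Deterministic pseudo-random gradient in [-1, 1) for an integer lattice point.
// The hash constants are arbitrary; any decent integer hash would do.
function gradientAt(i) {
  let h = Math.imul(i | 0, 0x27d4eb2d) ^ 0x9e3779b9;
  h = Math.imul(h ^ (h >>> 15), 0x85ebca6b);
  h ^= h >>> 13;
  return ((h >>> 0) / 4294967296) * 2 - 1;
}

// Fade curve 6t^5 - 15t^4 + 10t^3: flat at t = 0 and t = 1, so neighbouring cells join smoothly.
function fade(t) {
  return t * t * t * (t * (t * 6 - 15) + 10);
}

// 1D gradient noise: each integer point has a random slope; the value in between
// is a smooth blend of the contributions of the two neighbouring slopes.
function noise1d(x) {
  const x0 = Math.floor(x);
  const t = x - x0;
  const left = gradientAt(x0) * t;            // contribution of the left lattice point
  const right = gradientAt(x0 + 1) * (t - 1); // contribution of the right lattice point
  return left + (right - left) * fade(t);
}

// Sample the same kind of range as in the example above.
const samples = [];
for (let i = 0; i <= 10; i++) samples.push(noise1d(1 + i * 0.2));
```

Because the value at each integer point is exactly zero and the blend is smooth, nearby inputs always give nearby outputs, which is the property the planner and the drawing functions rely on.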

Just when I started to work on this project, I stumbled upon an article about producing another kind of generative art: Replicating Minecraft World Generation in Python by Bilal Himite. It explains Perlin noise and its usage for procedural generation much better than I could.

mountplanner can generate these chunk tags:

- "mountain": the most prominent shape of the picture, a distinctive mountain with small trees and houses; an attempt to add it is made every 30 pixels, and succeeds if the point is a local maximum of the Perlin noise;

- "distmount": the gray one in the far background, added every 1000 pixels;

- "flatmount": indeed a flat one (more like an island) in the foreground, with rocks and complex trees, added with a random chance of 0.1;

- "boat": well… a boat! Added with a chance of 0.2.

The algorithm of planning is adjusted by:

- a local registry of “points where mountain centers were added” (so no two mountains would have exactly the same center by x);

- the global STATE.occupiedXs, which ensures that a flatmount’s center is never planned at an x under an existing mountain… (but then, this x is adjusted by randomness, so, it is complicated!)
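The planning rules above can be sketched as follows. This is a heavy simplification under my own assumptions: `planFrame` is a hypothetical name, the y values and the noise scale are placeholders, noise and randomness are passed in as functions, and the random adjustment of x mentioned above is left out.

```javascript
// A simplified planner. `noise` and `random` are passed in, so the sketch does not
// depend on the project's Noise or PRNG. All y values are made-up placeholders.
function planFrame(xMin, xMax, noise, random, occupiedXs = new Set()) {
  const plan = [];
  const registry = new Set(); // local: x centers of mountains planned in this call
  const step = 30;

  // "distmount": far background, every 1000 pixels
  for (let x = Math.ceil(xMin / 1000) * 1000; x < xMax; x += 1000) {
    plan.push({tag: 'distmount', x, y: 200});
  }

  for (let x = xMin; x < xMax; x += step) {
    // "mountain": only where the noise has a local maximum, and once per center.
    // (Each x is visited once here, so the registry check matters only in a
    // planner that can propose the same center more than once.)
    const here = noise(x * 0.01);
    const isLocalMax = here > noise((x - step) * 0.01) && here > noise((x + step) * 0.01);
    if (isLocalMax && !registry.has(x)) {
      registry.add(x);
      occupiedXs.add(x);
      plan.push({tag: 'mountain', x, y: 300});
    }
    // "flatmount": chance 0.1, but never centered under an existing mountain
    if (random() < 0.1 && !occupiedXs.has(x)) plan.push({tag: 'flatmount', x, y: 350});
    // "boat": chance 0.2
    if (random() < 0.2) plan.push({tag: 'boat', x, y: 400});
  }
  return plan;
}

// Usage with deterministic stand-ins: a sine wave for noise, a fixed cycle for random.
const cycle = [0.05, 0.5, 0.9, 0.15];
let calls = 0;
const plan = planFrame(0, 3000, x => Math.sin(x), () => cycle[calls++ % cycle.length]);
```

Passing `occupiedXs` in as a parameter stands in for the global STATE.occupiedXs: planning of neighbouring frames could share one set.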

When the chunkloader receives the list of tags, it draws them with the appropriate functions. For example, the mountain tag corresponds to Mount.mountain (also, in this case, water is invoked, so each tall mid-plan mountain has water attached to it).

Mount.mountain (days 15, 16, 17, 18, 19, 20) first generates several layers: several arcs inside each other, adjusted by noise so that they do not look artificial.
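A rough sketch of how such nested, noise-adjusted arcs could be computed is below. The function name `mountainLayers`, the proportions, and the amount of wobble are all my guesses for illustration, and `noise` is assumed to return values between 0 and 1; the real Mount.mountain is more involved.

```javascript
// Build `numLayers` nested arcs of `numPoints` points each. The outermost layer
// (index 0) is the tallest and widest; inner layers shrink towards the base.
// `noise` is injected; wobble amplitude and proportions are illustrative guesses.
function mountainLayers(width, height, numLayers, numPoints, noise) {
  const layers = [];
  for (let layer = 0; layer < numLayers; layer++) {
    const scale = 1 - layer / numLayers; // 1 for the outer layer, smaller inside
    const points = [];
    for (let i = 0; i < numPoints; i++) {
      const angle = Math.PI * (i / (numPoints - 1)); // 0..PI: left foot to right foot
      const wobble = 1 + 0.2 * (noise(layer + i * 0.1) - 0.5); // up to +-10% irregularity
      const x = -Math.cos(angle) * (width / 2) * scale;
      const y = -Math.sin(angle) * height * scale * wobble; // negative y is "up" on a canvas
      points.push([x, y]);
    }
    layers.push(points);
  }
  return layers;
}

const layers = mountainLayers(400, 200, 5, 50, x => 0.5 + 0.5 * Math.sin(x * 7));
```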

The outer layer is then used to fill the mountain with color and draw the outline, and the rest of them to produce the texture of the mountain. Then the internal function generate is called many times to add smaller objects to the mountain. generate receives function parameters: how to choose the points at which to draw, and, once a point is chosen, how to draw. E.g., a generate call like this:

generate(

(x, y) => Mount.rock(x + x_offset, y + y_offset, seed, {wid: rand(20, 40), hei: rand(20, 40), sha: 2}),

{if: (_, pointIdx) => (pointIdx == 0 || pointIdx == resolution.num_points - 1) && chance(0.1)},

);

…codes the statement roughly saying “draw a rock at the point (x, y), if it is at the end of the arc (pointIdx is the index of the currently checked point in the list of the layer’s points, i.e. the condition is only true for the first and last points: the ends of the arc), and with probability 0.1.”
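To make the calling convention concrete, here is a guess at what a generate-like helper could look like inside. `makeGenerate` is a hypothetical wrapper I use to hand it the layers, and the `chance(0.1)` part is left out so that the result is deterministic.

```javascript
// A guess at the shape of `generate`: walk every point of every layer, ask the
// condition whether to draw there, and collect whatever the draw callback returns.
function makeGenerate(layers) {
  return function generate(draw, {if: condition}) {
    const drawn = [];
    layers.forEach((points, layerIdx) => {
      points.forEach(([x, y], pointIdx) => {
        if (condition(layerIdx, pointIdx)) drawn.push(draw(x, y));
      });
    });
    return drawn;
  };
}

// Two tiny three-point "arcs" as sample data.
const sampleLayers = [
  [[0, 0], [10, -5], [20, 0]],
  [[5, 0], [10, -2], [15, 0]],
];
const numPoints = 3;
const generate = makeGenerate(sampleLayers);

// Draw a "rock" only at the two ends of each arc.
const rocks = generate(
  (x, y) => `rock at ${x},${y}`,
  {if: (_, pointIdx) => pointIdx == 0 || pointIdx == numPoints - 1},
);
```

With this shape, every kind of object on the mountain is just a pair of functions: one that says where, one that says what.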

In general, each kind of object has its own hand-crafted conditions to “randomly” appear, and its own range of randomness in attributes (like width, height, number of stories in the building, or its rotation), producing surprisingly pleasant and natural-looking randomness.

There are several objects that can be drawn on a mountain:

- various kinds of small trees: Tree.tree01 to Tree.tree03;

- various kinds of buildings: Arch.arch01 to Arch.arch04, and, somewhat Easter-egg-y, Arch.transmissionTower01;

- finally, Mount.rock.

For example, each tree01 (days 2, 3, 4, 5)

…is created by producing two lists of 10 points each, making two parallel lines, slightly adjusted by noise, and drawing several “blobs” (ellipses, but adjusted by random noise) around the top 6 of them, reducing in size towards the top of the tree.
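The description above can be turned into a small planning sketch. It only computes the geometry (trunk points and blob ellipses) and draws nothing; `planTree`, the blob sizes, and the one-blob-per-point simplification are my assumptions, and `noise` is assumed to return values between 0 and 1.

```javascript
// Plan a tree01-like shape: two roughly parallel trunk lines of 10 points each,
// plus blobs around the top 6 points, shrinking towards the top.
// `noise` returns values in [0, 1]; all sizes are illustrative guesses.
function planTree(x, y, height, trunkWidth, noise) {
  const numPoints = 10;
  const numBlobbed = 6;
  const left = [];
  const right = [];
  const blobs = [];
  for (let i = 0; i < numPoints; i++) {
    const py = y - (height * i) / (numPoints - 1); // i = 0 is the base, i = 9 the top
    const sway = (noise(i * 0.5) - 0.5) * trunkWidth; // same sway on both lines keeps them parallel
    left.push([x - trunkWidth / 2 + sway, py]);
    right.push([x + trunkWidth / 2 + sway, py]);
    if (i >= numPoints - numBlobbed) {
      const size = trunkWidth * 4 * (1 - i / numPoints); // smaller blobs higher up
      blobs.push({cx: x + sway, cy: py, rx: size, ry: size / 2});
    }
  }
  return {left, right, blobs};
}

const tree = planTree(100, 300, 90, 6, i => 0.5 + 0.5 * Math.sin(i * 3));
```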

tree02 is just a set of blobs, with no trunk; and tree03 (day 6)

…is made of one filled polygon of two trunk lines, and, again, some blobs—but smaller than for tree01 and shifted aside from the trunk.

Looking at something different, this is arch02 (days 7 and 11, with the days in between spent on its various elements):

It is produced by the box_ function for the walls of each of its two stories, and roof for their, well, roofs.

The box_ function (days 7, 8, 9, 10) just calculates the lines of the parallelepiped of the house, considering the desired size, rotation, perspective, and transparency. The lines (initially represented by two points) are then “extrapolated” into many mid-points with extrapolate (called div in the original code, days 8 and 9); then each line is drawn with stroke_, imitating a brush stroke. stroke_ (day 10) does this by just calculating two lines that are shifted a bit (by noise, again) up and down from the points of the box line, and making a thin filled polygon of them, producing the effect of a slightly uneven brush. Finally, the box uses a decorator function (day 10) for wall ornaments, which just calculates a few more lines inside the specified wall, and draws them, again, with stroke_.
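The two helpers at the bottom of this stack are simple enough to sketch. This is my reading of the idea, not the project's code: `strokePolygon` is a hypothetical name, the shift is purely vertical here for simplicity, and `noise` is assumed to return values between 0 and 1.

```javascript
// Split the segment between two points into `steps` equal parts, returning
// steps + 1 points including both ends (my reading of what extrapolate/div
// does for a two-point line).
function extrapolate([x1, y1], [x2, y2], steps) {
  const points = [];
  for (let i = 0; i <= steps; i++) {
    const t = i / steps;
    points.push([x1 + (x2 - x1) * t, y1 + (y2 - y1) * t]);
  }
  return points;
}

// Turn a polyline into a thin closed polygon: one edge shifted up, the other
// shifted down, each by a noise-driven half-width.
function strokePolygon(points, maxWidth, noise) {
  const upper = [];
  const lower = [];
  points.forEach(([x, y], i) => {
    const half = (maxWidth / 2) * (0.5 + 0.5 * noise(i * 0.3)); // uneven brush width
    upper.push([x, y - half]);
    lower.push([x, y + half]);
  });
  return upper.concat(lower.reverse()); // along the top edge, then back along the bottom
}

const line = extrapolate([0, 0], [100, 0], 10);
const polygon = strokePolygon(line, 4, i => 0.5 + 0.5 * Math.sin(i * 5));
```

Filling that polygon gives a line whose thickness varies along its length, which is what makes it read as a brush stroke rather than a ruler line.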

This is the end of what I’ve seen closely. Much more is left outside of the investigation—other architectural forms, larger (foreground) trees, people, boats, etc. But now I feel that it can be guessed/extrapolated from what we already know.

Simplifying, we might say that the main tools of the creation here are:

- simple geometry primitives—lines, ellipses, parallelepipeds (do you love the word already?), arcs—and imagining how “Chinese-painting-looking” elements like trees and pagodas can be described with them;

- randomness in general and Perlin noise in particular, making it all look hand-drawn;

- probably, a lot of hand-picking and experimenting with ranges of randomness, orders of elements, probabilities, and combinations—to make the picture visually rich each time.

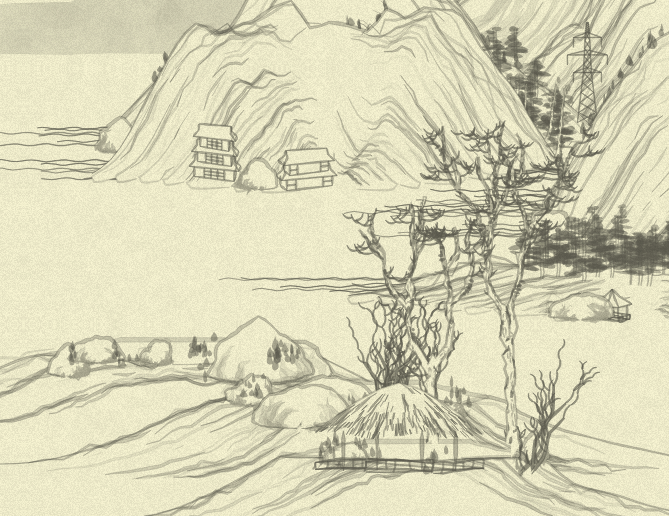

Just for reference, here is another side of the “debug picture”, where we can see several other features:

…but I urge you to go and play with the online example, and adore its variability, made of—as we now know—not that large a number of elements.

Despite the fact that digging into “how it is all done” leads to many “ah, it is that simple!” moments, my fascination with the talent of Lingdong Huang, the original author, only grew stronger. This way of seeing things, and the ability to deconstruct artistic imagination into simple math facts and construct it back into the picture, I can only adore and envy.

PS: I’ve cheated a bit with timing: this huge last post took a few hours on days 24 and 25. Other than that, the timeboxed experiment worked well for me, and, I hope, was fun to follow!